Based on that one Senate hearing, it looks like big companies like Facebook, Discord and Twitter are aiming for the maximum percent of false positives and false negatives when it comes to CSAM.

The only thing I know about that screenshot is that it used to say “show results anyway” which is probably worse in most cases

“LOOK!! WE’RE ACTUALLY DOING SOMETHING!!! ALL OUR USERS ARE NOT PDF FILES!”

with any luck this will destroy them and funnel disgruntled users our way, where the servers are too numerous to ever fully take down and many aren’t even US based anyways

Unfortunately, I don’t think so. Most of the politicians were virtue signaling, asking questions that were impossible and demanding timetables that they weren’t going to get anyway. One woman actually had some half decent data prepared, but I don’t think anybody else was really taking it seriously.

Now if there was some legislation passed, specifically stuff that wasn’t KOSA, that would be something else. KOSA seems prepped to simply destroy free speech on the internet, and it would mostly harm smaller social media networks that don’t have lawyers and around-the-clock moderators to police every single comment and post.

16% is pretty good. The ones at three to one percent are the weirdos.

I really hate and avoid when my phone switches into battery saver at 15%, so in my mind 16% is like 1%

My skin crawls if it goes below 30%.

You ever seen a phone at 0%?

Removed by mod

To prolong your battery’s lifespan you shouldn’t let it drain below 20%.

Don’t phone battery indicators lie to you now so that 0% displayed is actually about 20% specifically because of this?

Yes and 100% isn’t 100%

People and their batteries though… It’s a futile obsession for some. It doesn’t matter how much science or logic you throw at them there’s always something.

Take fast charging: for some time now it hasn’t run at the full max rate for the whole charge, precisely to keep heat within tolerances. Still, some people think doing the work themselves is somehow better thermal management than a modern battery controller, to the point they think it will make a material difference.

For a phone, you’re probably going to keep it for less than 5 years, so babying the battery really isn’t worthwhile. The battery will probably outlast the time you keep the phone if you just charge overnight every night or fully charge it daily.

Though some of the phone makers are finally getting the message that some of us want to keep a hold of our expensive phones for a long while. My new Pixel 8 has 7 years of security updates, which should work fine for my purposes. I’ll probably replace the battery somewhere in there, though.

Also charging it fully but I don’t know how important that one is.

Very important. Keeping it between 20% and 80% is a good idea. It differs between battery chemistries, though.

Just as important. And most phones these days have a setting to prevent it from charging to 100%. E.g. I set mine to stop at 90%.

I run grapheneos which doesn’t have that. I think if I get a smart plug I could use an automation in Home Assistant to turn the charger off.
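For what it’s worth, the decision logic for that kind of smart-plug setup can be sketched in a few lines of Python. This is only a rough sketch, not Home Assistant code: the function name `plug_should_be_on` and the 80%/75% thresholds are made up for illustration, and reading the battery level and actually switching the plug are left out entirely. The gap between the two thresholds is there so the plug doesn’t flip on and off around a single value.

```python
def plug_should_be_on(
    battery_percent: float,
    plug_is_on: bool,
    stop_at: float = 80.0,       # assumed cutoff, not a recommendation from the thread
    resume_below: float = 75.0,  # assumed resume point, slightly lower to avoid flapping
) -> bool:
    """Decide whether the charger's smart plug should be powered."""
    if battery_percent >= stop_at:
        # Reached the cap: cut power to the charger.
        return False
    if battery_percent < resume_below:
        # Dropped well below the cap: allow charging again.
        return True
    # In between the two thresholds: keep whatever state the plug is already in.
    return plug_is_on
```

With these assumed defaults, a phone at 50% gets power, a phone at 80% gets cut off, and a phone sitting at 78% stays in whatever state the plug was already in.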

For lithium batteries (phone batteries) it’s actually more important than draining to 0. Many studies indicate that the average phone battery should last several thousand cycles while only losing 5-10% of total capacity, provided it is never charged above 80%. The minimum charge level (even down to 0%) and the charge rate below 70% don’t need to be restricted either.

The tl;dr is that every time you charge to 100%, it’s the same as 50-100 charges to 80%. Draining a lithium-chemistry battery to 0 isn’t an issue as long as you don’t leave it in a discharged state (i.e. you charge it right away).

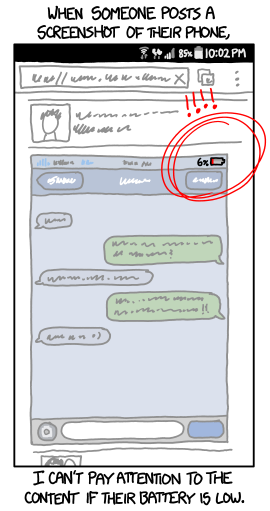

Here’s a hot tip. If you’re on Android, open the developer settings and turn on “demo mode” before taking screenshots. It makes the battery and signal display as 100% so you don’t get judged by internet commenters who don’t go outside.

Or you click that little edit button and crop the top of the image completely off.

> so you don’t get judged by internet commenters who don’t go outside.

I also go outside. I just stop using my phone at 30% to preserve the battery. That’s why I judge these people. Turn it off and preserve the battery.

My phone is constantly either dead or under 40%

your cue to stop using it as soon as it drops to 30%

Are you trapped in the wilderness? If you’ve been abducted by hyenas, hoot like an owl twice.

hoot hoot wolves hoot hoot not hyenas

Removed by mod

Attribution since poster forgot.

I literally used the embed link from XKCD’s website

That’s just for embedding the image, not citing. If it’s not clickable by the end user, then an embed link is not a source, merely a delivery method.

That’s a problem with your client, not the link

Perhaps. Regardless, if it’s a limitation that most people’s clients face, then it is not a reliable method of attribution. Either way, most people just put the source in the title or comment. It’s more reliable. ¯\_ (ツ) _/¯

I have no way of seeing that on my client. Do you see that information on yours?

Not directly on the rendered comment, but if you view markdown for my comment you can see the link to the source.

No such option sadly.

Edit: select text does it.

Ignorance is bliss

I reported loads of content on Instagram, genuinely creepy accounts of “athletic teens” and they all got rejected.

I got caught in a horrible recommendations loop because I’d like family photos of running and gymnastics for my nieces and cousins.

> I got caught in a horrible recommendations loop because I’d like family photos of running and gymnastics for my nieces and cousins.

I never reach that point on Facebook. I scroll for about 5 posts to see what my family and friends might be up to and get too frustrated with unmoderated spam and report it as spam and close the tab and move on

Removed by mod

Honestly they are all bad

Removed by mod

Yeah, I disagree. TikTok content is way too short to have any meaningful impact. It’s just dopamine over and over.

Removed by mod

Yeah, and nobody in their right mind would ever try to use Vine for journalism. I’m confused why you think TikTok is different.

Removed by mod

Ah, I didn’t realize you could use TikTok in the browser. Anyway, I personally won’t use such platforms as they are way too addictive for me.

Removed by mod

One of the biggest problems with the internet today is that bad actors know how to manipulate or dodge the content moderation to avoid punitive consequences. The big social platforms are moderated by the most naive people in the world. It’s either that or willful negligence. Has to be. There’s just no way these tech bros who spent their lives deep in internet culture are so clueless about how to moderate content.

I know them. I worked in this industry. They’re not naive. What basis do you have for these comments?

I think you’re conflating them with the business executives running said social and gaming companies. Stop calling them techbros. Meta is not a tech startup. They’re a transnational corporation. They have capitalist execs running the companies.

Indie megacorp buying their first nation just a startup

Musk

> bad actors know how to manipulate or dodge the content moderation to avoid punitive consequences.

People have been doing that since the dawn of the internet. People on my old forum in the 90s tried to circumvent profanity filters on phpBB.

Even now you can get round Lemmy.World filters against “fag-got” by adding a hyphen in it.

Nothing new under the sun.
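To make the hyphen trick concrete, here is a rough Python sketch of why a plain substring filter misses it, and what a normalizing filter might do instead. Everything here is an assumption for illustration: the placeholder blocklist word `spam`, the function names, and the normalization steps are not how phpBB, Lemmy, or any other platform actually implements its filters.

```python
import re
import unicodedata

# Harmless placeholder term; a real filter list would look different.
BLOCKLIST = {"spam"}


def normalize(text: str) -> str:
    """Fold case, strip accents, and drop everything that is not a-z or 0-9."""
    decomposed = unicodedata.normalize("NFKD", text.casefold())
    # Remove combining marks, so an accented letter becomes its base letter.
    no_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Remove hyphens, spaces, punctuation and any other separators.
    return re.sub(r"[^a-z0-9]+", "", no_marks)


def naive_hit(text: str) -> bool:
    """Plain case-insensitive substring check, as a simple filter might do."""
    lowered = text.casefold()
    return any(term in lowered for term in BLOCKLIST)


def normalized_hit(text: str) -> bool:
    """Same check, but against the normalized text."""
    cleaned = normalize(text)
    return any(term in cleaned for term in BLOCKLIST)
```

Here `naive_hit("sp-am")` and `naive_hit("späm")` both come back false, while `normalized_hit` catches both. The catch is that stripping separators also glues innocent words together: `normalized_hit("wasp amble")` is a false positive, which is the usual trade-off with this kind of filter.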

The thing is that words can have a very broad range of meaning depending on who uses them and how (among many other factors), but you can’t accurately code all of that into a form that computers can understand. Even ignoring bad actors it makes certain things very difficult, like if you ever want to search for something that just happens to share words with something completely different which is very popular.
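A small Python sketch of one slice of that problem: matching on raw substrings flags innocent words that merely contain a filtered term, and matching on whole words only fixes the spelling overlap, not the meaning. The term `hell` and both function names are placeholders picked for illustration, not taken from any real filter.

```python
import re


def substring_match(term: str, text: str) -> bool:
    """Flag the text if the term appears anywhere, even inside another word."""
    return term.lower() in text.lower()


def whole_word_match(term: str, text: str) -> bool:
    """Flag the text only if the term appears as a standalone word."""
    pattern = rf"\b{re.escape(term)}\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None
```

`substring_match("hell", "Say hello to the shell")` flags a harmless sentence, while `whole_word_match` lets it through and still catches `"what the hell"`. Neither version knows what the author meant by the word, which is the part that can’t easily be coded.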

Yeah I think this applies to the fediverse as well.

Auto-moderation is both lazy and is only going to get worse. Not saying there isn’t some value on things being hard-banned (like very specific spam like shit that just keeps responding to everything with the same thing non-stop). But these mega outlets/sites want to just use full automation to ban shit without any human interactions. At least unless you or another corp has connections on the inside to get a person or people to fix it. Just like how they make it so fucking hard to ever reach a person when calling (or trying to even find) a support line.

This automated shit just blacklists more and more shit and can completely fuck over people that use those sites for income (and they even can’t reach a person when their income is cut off for false reasons and don’t get back-pay for the period of a strike/ban). The bad guys will always just keep moving to a new word or phrase as the old ones get banned. So we as users are actually losing words and phrases and the actual shit is just on to the next one without issues.

That’s what you get for all the teabagging you’ve been doing…

I had a post of mine flagged for multiple days on there because it had an illustration of a woman in a full length wool coat completely covering her and not in any way sexual. Shit is so stupid

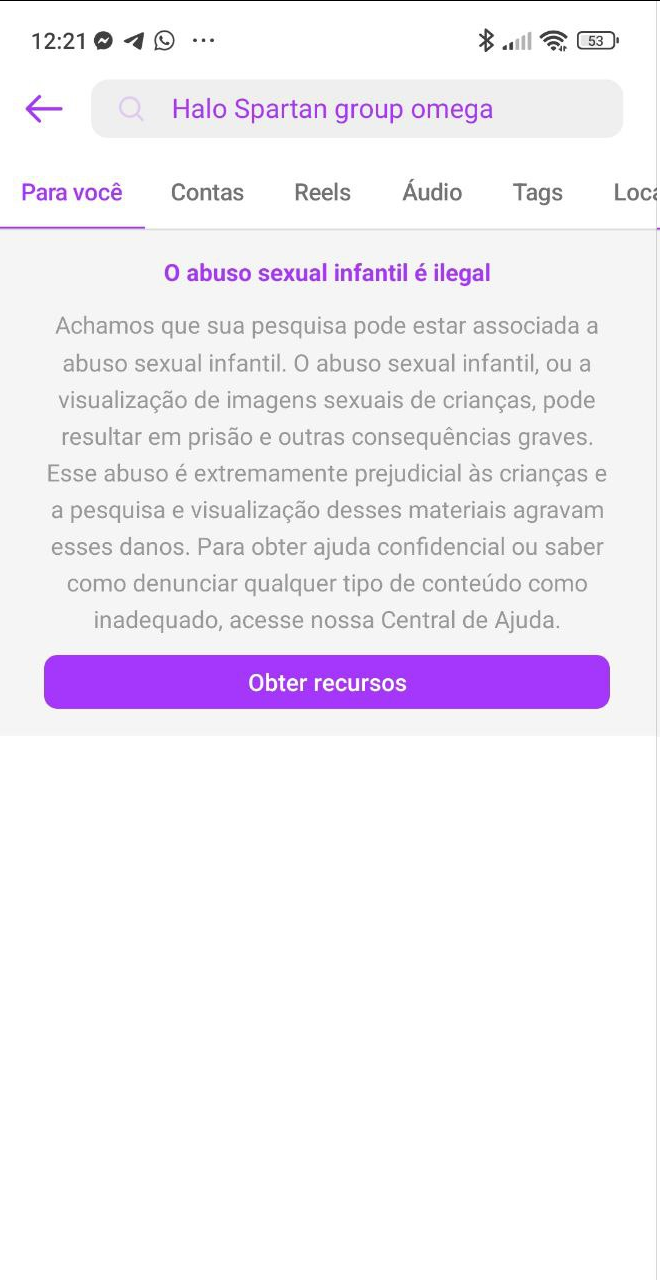

How do we know they didn’t type something more explicit to get the result and just change what’s in the search bar? Has anyone verified this?

I actually don’t know, I’m not sure it is possible (I never used Instagram, the search might be auto-submitting for all I know) but intentionally flagging yourself as potential child abuser, for clout, is a bit extreme…

just did it, it’s real.

Nutty thank you for putting yourself on the list for science.

Barely 2 years ago I noticed that people were posting porn on Insta, and it was publicly visible just because they tagged #cum as #cüm. I don’t think this is possible now, but basically corporations are dumb and people posting disallowed content can be creative as hell.

ah yes, cumlauts

> basically corporations are dumb and people posting disallowed content can be creative as hell.

I generally get the feeling with this kind of thing that it’s not incompetence but either an unwillingness to act quickly or an inability to

Well, the Spartans were pederasts…

Your post has been banned under the provisions of the “it’s bad to throw disabled babies off a cliff act”.

Yet another thing that woke moralists took from us smh

It’s dumb, but it’s also possible that a combination of those terms has been adopted by some group distributing CSAM.

At one point, “cheese pizza” was a term they apparently used on YouTube videos etc due to it having the same abbreviation as CP (Child Pornography).

Sick fucks ruining everything for everyone

I agree with you is the TL;DR, and the rest is just my mad ranting opinions about companies being allowed to just auto-censor us. So feel free to completely ignore the rest. lol.

It is like just banning words and phrases just because bad people use them has just become the norm. I really really can’t stand the way that channels on YT constantly have to self-censor basically everything (even if the video is just reporting on or trying to explain bad shit that is or has happened). And it never seems to actually stop the actual issues from happening. Just means the bad people just move on to a new word or phrase that is then itself banned. It isn’t about actually stopping fucked-up shit from happening. It is just about making sure advertisers and other sources of money don’t throw a fit.

We always hear about how places like China are bad in part for censoring words and speech. But in the US and other western nations we pretend we are allowed to freely speak uncensored. We have always had censoring of speech, it is just that the real rulers of the country are allowed to do it instead. Keeps the government’s hands free from legally being the enforcers of doing it to us. Shit like CP is fucked, and it should be handled for what it is, but allowing for-profit companies and especially their algorithms/AI to decide what we can and can’t say or search for, without any level of human interaction, in ways that very much lead to false bans, is also fucked.

It is waaaay too easy for all the mega corps to completely take down channels and block creators from revenue of their own work just completely automated. But the accused channel can’t ever get a real person to both get clear understanding of what and who is attacking them, and to explain why their strike/bans aren’t valid. I have heard that even channels that have gotten written/legal permission from a big studio to use a clip of music or segment from video (music being the worst) will STILL catch automated strikes for copyright violations.

We don’t need actual government censors, because the mega corps with all the money are allowed to do it for them. We have rights but they don’t really matter if they can say a private company or org made up of people from various mega corps are allowed to do it for them.

> At one point, “cheese pizza” was a term they apparently used on YouTube videos etc due to it having the same abbreviation as CP (Child Pornography).

This in turn was why the Podesta emails led to the whole Pizzagate thing. There were a bunch of emails with weird phrasings like “going to do cheese pizza for a couple of hours” that just aren’t how people talk or write, so internet weirdos thought it was pedo code, and then it kinda went insane from there.

Remember, searching for “halo” is banned because it could potentially be linked to pedophilia, but editing a video of the president to look like a pedophile is fine because “it wasn’t done with AI.”

OOTL – what happened there?

Biden was edited to look like he was groping his granddaughter for an extended amount of time instead of quickly putting a pin above her breast. It was posted to Facebook/Instagram/Meta. AI wasn’t used.

The spartans were children at one point

Here is an alternative Piped link(s):

https://piped.video/RybNI0KB1bg

We beat KOSA before, we can beat it again. Contacting your reps matters. Voting matters, especially in primaries and locals. So does being active politically in other ways.

I’m not familiar with American stuff, what is KOSA?

It’s the “Kids Online Safety Act”. Basically it’s using the old “think of the children!” move, but in reality conservatives are trying to push anything queer back into the dark.

ahhh, so ted cruz did do something good