I’m not sure whether deepfake porn should be banned; it’s already difficult to enforce copyright on the internet, because content just gets redistributed.

Similarly, it would be impossible to enforce restrictions on deepfake porn, because it can easily be redistributed.

It’s also sexual abuse, on the same level as taking a nude picture of someone without their knowledge and posting it publicly.

That’s already two crimes: taking an intimate picture without consent, and sharing an intimate picture without consent. So why aren’t people getting punished?

You realize people are born naked, right? The whole concept that someone being seen “uncovered” is somehow sexual, or that the act of uncovering is somehow a sexual invitation, is itself rape culture.

Just as men should be allowed to wear dresses and women to wear pants, people should be able to remain or become naked without it meaning anything.

Of course, self-determination is implicit here. So what about deepfakes, then, where a man is, say, photoshopped into a dress? Does that mean his rights have been violated, because people might believe he intended to present himself that way to the world? I say no.

People imagine strangers they come across in public naked all the time. So what if one of them were a great artist and could draw or paint a hyperrealistic representation of what they imagine? What if one day we have the technology to pull an image from someone’s mind? Is this person now committing a thought crime? Does it only become a crime when it is shared with another person?

TL;DR: people need to be less repressed, not more. Repression is what leads to crazy accusations of thought crimes and abuse-by-proxy.

I have no clue how you came to the conclusion that slander & sexual harassment make someone less repressed.

They can get naked after people stop getting horny.

THIS

I wouldn’t assume that the source image was taken illegally; it could be a picture the person consented to (e.g., a married couple consenting to a photo shoot together).

What if the “victim” was accidentally framed in the picture?

Also, there is a danger that someone could falsely claim a video is a deepfake of a victim.

Yes, but unless they consented specifically to having porn made from those images, that’s still highly problematic.

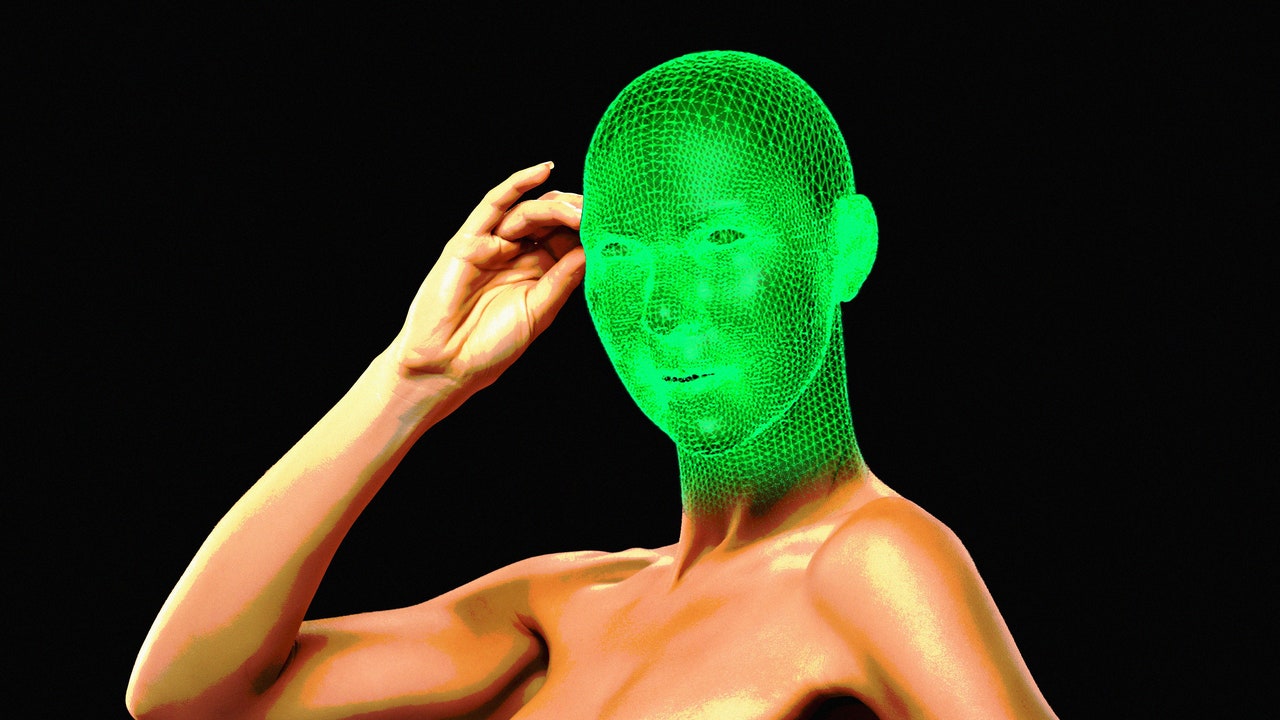

But only because that’s where technology is today. You need many images of the face from many angles, and it is only a face swap onto real video that was actually captured.

Within 50 years it will be possible to create complete fakes from whole cloth: no images, no video as a source, only a person’s imagination. At that point it is the equivalent of cartoons.