Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this)

In other news, Hindenburg Research just put out a truly damning report on Roblox, aptly titled “Roblox: Inflated Key Metrics For Wall Street And A Pedophile Hellscape For Kids”, and the markets have responded.

TIL Roblox is listed on the fucking stock market.

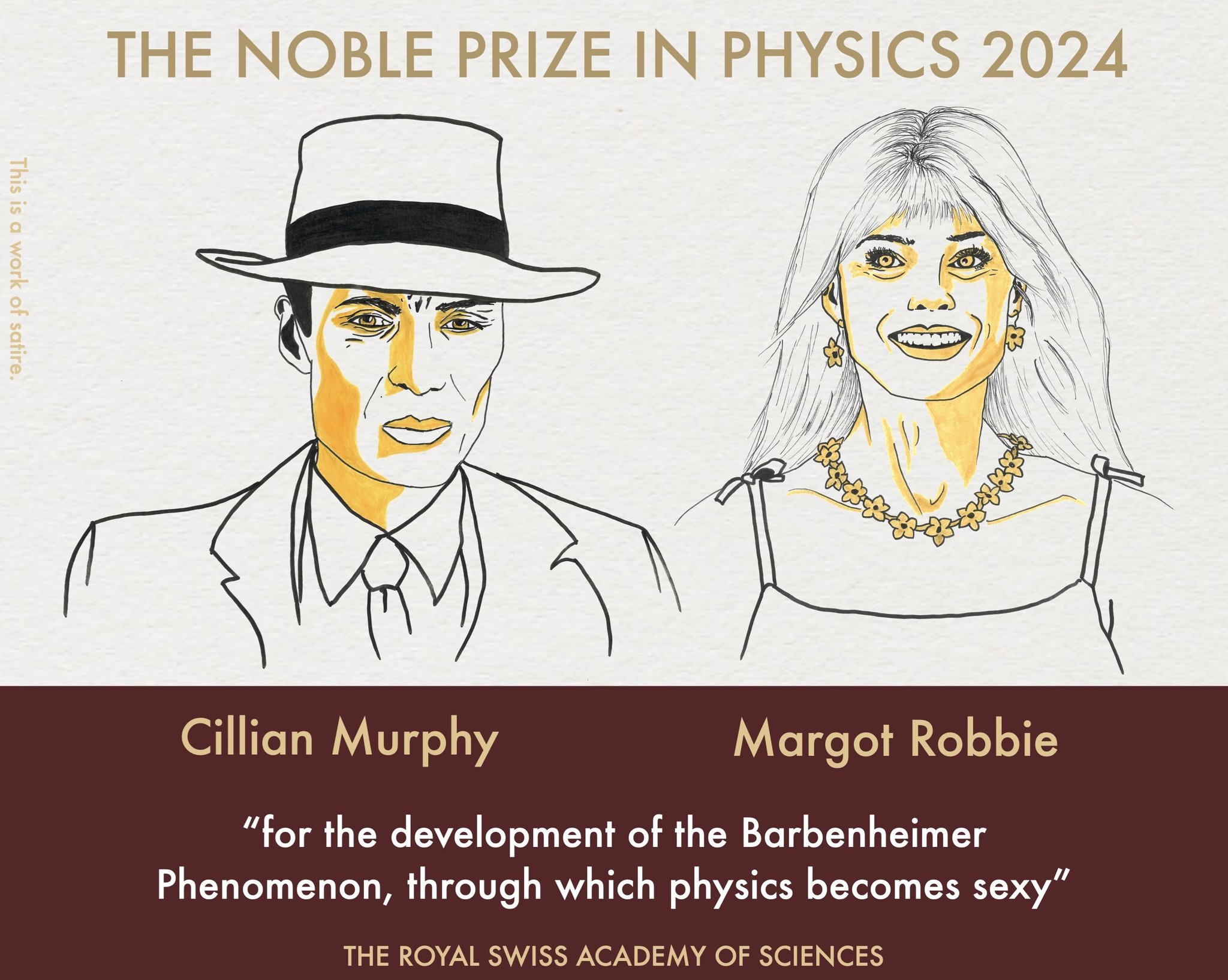

i wouldn’t want to sound like I’m running down Hinton’s work on neural networks, it’s the foundational tool of much of what’s called “AI”, certainly of ML

but uh, it’s comp sci which is applied mathematics

how does this rate a physics Nobel??

They’re reeeaallly leaning into the fact that some of the math involved is also used in statistical physics. And, OK, we could have an academic debate about how the boundaries of fields are drawn and the extent to which the divisions between them are cultural conventions. But the more important thing is that the Nobel Prize is a bad institution.

a friend says:

effectively they made machine learning look like an Ising model, and you honestly have no idea how much theoretical physicists fucking love it when things turn out to be the Ising model

does that match your experience? if so i’ll quote that

That sounds about right, yeah.

lol holy shit actual tweet

Image description: tweet from the official Nobel Prize account. Text reads,

Congratulations to our 2024 medicine laureate Victor Ambros ✨

This morning he celebrated the news of his prize with his colleague and wife Rosalind Lee, who was also the first author on the 1993 ‘Cell’ paper cited by the Nobel Committee.

#NobelPrize

Blake reaction description: sighing and muttering, “yep, assholes will asshole”

Wow. Congratulations to Rosalind Lee for her colleague and husband’s Nobel. I can only dream of one day having my spouse be recognized with such a prestigious accolade for something we have done.

yeah, takes from physicists i know range from “wtf” to “it’s plaaausible with a streeetch”

looking through the committee, I see Ulf Danielsson is notable on AI mostly for being skeptical (he writes pop sci books so people ask him about all manner of shit)

https://www.bbc.com/news/articles/c62r02z75jyo

It’s going to be like the Industrial Revolution - but instead of our physical capabilities, it’s going to exceed our intellectual capabilities … but I worry that the overall consequences of this might be systems that are more intelligent than us that might eventually take control

😩

Getting a head start on that Nobel disease.

This work getting the physics nobel for “using physics” is reeeeeeal fuckin tangential

Also: TL note: 11,000,000 Swedish Krona equals 11,386,313.85 Norwegian Krone

the mozilla PR campaign to convince everyone that advertising is the lifeblood of commerce and that this is perfectly fine and good (and that everyone should just accept their viewpoint) continues

We need to stare it straight in the eyes and try to fix it

try, you say? and what’s your plan for when you fail, but you’ve lost all your values in service of the attempt?

For this, we owe our community an apology for not engaging and communicating our vision effectively. Mozilla is only Mozilla if we share our thinking, engage people along the way, and incorporate that feedback into our efforts to help reform the ecosystem.

are you fucking kidding me? “we can only be who we are if we maybe sorta listen to you while we keep doing what we wanted to do”? seriously?

How do we ensure that privacy is not a privilege of the few but a fundamental right available to everyone? These are significant and enduring questions that have no single answer. But, for right now on the internet of today, a big part of the answer is online advertising.

How do we ensure that traffic safety is not a privilege of the few but a fundamental right available to everyone? A big part of the answer is drunk driving.

How do we prevent huge segments of the world from being priced out of access through paywalls?

Based Mozilla. Abolish landlords. Obliterate the commodity form. Full luxury gay communism now.

What a pisstake. Shit I don’t want to use palemoon, are there any other browsers???

the purestrain corporate non-apology that is “we should have communicated our vision effectively” when your entire community is telling you in no uncertain terms to give up on that vision because it’s a terrible idea nobody wants

“it’s a failure in our messaging that we didn’t tell you about the thing you’d hate in advance. if we were any good we would’ve gotten out ahead of it (and made you think it’s something else)”

and the thing is, that’s probably exactly the lesson they’re going to be learning from this :|

Don’t know how much this fits the community, as you use a lot of terms I’m not inherently familiar with (is there a “welcome guide” of some sort somewhere I missed).

Anyway, Wikipedia moderators are now realizing that LLMs are causing problems for them, but they are very careful to not smack the beehive:

The purpose of this project is not to restrict or ban the use of AI in articles, but to verify that its output is acceptable and constructive, and to fix or remove it otherwise.

I just… don’t have words for how bad this is going to go. How much work this will inevitably be. At least we’ll get a real world example of just how many guardrails are actually needed to make LLM text “work” for this sort of use case, where neutrality, truth, and cited sources are important (at least on paper).

I hope some people watch this closely, I’m sure there’s going to be some gold in this mess.

The purpose of this project is not to restrict or ban the use of AI in articles, but to verify that its output is acceptable and constructive, and to fix or remove it otherwise.

Wikipedia’s mod team definitely haven’t realised it yet, but this part is pretty much a de facto ban on using AI. AI is incapable of producing output that would be acceptable for a Wikipedia article - in basically every instance, it’s getting nuked.

lol i assure you that fidelitously translates to “kill it with fire”

Yeah, that sounds like text which somebody quickly typed up for the sake of having something.

it is impossible for a Wikipedia editor to write a sentence on Wikipedia procedure without completely tracing the fractal space of caveats.

I’d like to believe some of them have, but it’s easier or more productive to keep giving the benefit of the doubt (or at least pretend to) than argue the point.

Welcome to the club. They say a shared suffering is only half the suffering.

This was discussed in last week’s Stubsack, but I don’t think we mind talking about the same thing twice. I, for one, do not look forward to browsing Wikipedia exclusively through pre-2024 archived versions, so I hope (with some pessimism) their disappointingly milquetoast stance works out.

Reading a bit of the old Reddit sneerclub can help understand some of the Awful vernacular, but otherwise it’s as much of a lurkmoar as any other online circlejerk. The old guard keep referencing cringe techbros and TESCREALs I’ve never heard of while I still can’t remember which Scott A we’re talking about in which thread.

Scott Computers is married and a father but still writes like an incel and fundamentally can’t believe that anyone interested in computer science or physics might think in a different way than he does. Dilbert Scott is an incredibly divorced man. Scott Adderall is the leader of the beige tribe.

Scott Adderall

You Give Adderall A Bad Name

shit wasn’t there another one

There is always another Scott.

you know, one of the most abusive shitty people I’ve ever personally known was also a Scott

Montgomery Scott clearly statistical anomaly

Ah, but he’s not really a Scott so much as a Scot, and a Monty to boot.

oh you did better than I did

5 internet cookies to you

Don’t know how much this fits the community, as you use a lot of terms I’m not inherently familiar with (is there a “welcome guide” of some sort somewhere I missed)

first impression: your post is entirely on topic, welcome to the stubsack

techtakes is a sister sub to sneerclub (also on this instance, previously on reddit) and that one has a bit of an explanation. generally any (classy) sneerful critique of bullshit and wankery goes, modulo making space for chuds/nazis/debatelords/etc (those get shown the exit)

Now in 404media.

you use a lot of terms I’m not inherently familiar with (is there a “welcome guide” of some sort somewhere I missed).

we’re pretty receptive to requests for explanations of terms here, just fyi! I imagine if it begins to overwhelm commenting, a guide will be created. Unfortunately there is something of an arms race between industry buzzword generation and good sense, and we are on the side of good sense.

PC Gamer put out a pro-AI piece recently - unsurprisingly, Twitter tore it apart pretty publicly:

I could only find one positive response in the replies, and that one is getting torn to shreds as well:

I did also find a quote-tweet calling the current AI bubble an “anti-art period of time”, which has been doing pretty damn well:

Against my better judgment, I’m whipping out another sidenote:

With the general flood of AI slop on the Internet (a slop-nami as I’ve taken to calling it), and the quasi-realistic style most of it takes, I expect we’re gonna see photorealistic art/visuals take a major decline in popularity/cultural cachet, with an attendant boom in abstract/surreal/stylised visuals

On the popularity front, any artist producing something photorealistic will struggle to avoid blending in with the slop-nami, whilst more overtly stylised pieces stand out all the more starkly.

On the “cultural cachet” front, I can see photorealistic visuals becoming seen as a form of “techno-kitsch” - a form of “anti-art” which suggests a lack of artistic vision/direction on its creators’ part, if not a total lack of artistic merit.

Why is it always bioshock girl 😭

Another upcoming train wreck to add to your busy schedule: O’Reilly (the tech book publisher) is apparently going to be doing ai-translated versions of past works. Not everyone is entirely happy about this. I wonder how much human oversight will be involved in the process.

https://www.linkedin.com/posts/parisba_publications-activity-7249244992496361472-4pLj

translate technically fiddly instructions of the type where people have trouble spotting mistakes, with patterned noise generators. what could go wrong

Earlier today, the Internet Archive suffered a DDoS attack, which has now been claimed by the BlackMeta hacktivist group, who says they will be conducting additional attacks.

Hacktivist group? The fuck can you claim to be an activist for if your target is the Internet Archive?

Training my militia of revolutionary freedom fighters to attack homeless shelters, soup kitchens, nature preserves, libraries, and children’s playgrounds.

conservative who supports homeless shelters, soup kitchens, nature preserves, libraries, and children’s playgrounds for accelerationist reasons

The average orange site hacktivist libertarian. They are just mad about the hypocrisy you see.

(This post was sponsored by the hn guy who was mad at the tech guy who stopped doing startups as he had not given back all the money. Btw the actual communications of sn_blackmeta seem quite weird, talking about the global zionists, the devil, and having a certain ‘I’m 16 and this is deep and edgy’ quality. For ex see this).

Maybe one day self-righteous computer crackers will get over the V for Vendetta, apocalypse cult, latin chanting, hooded robes, “we are anonymoos we are a legionella we do not frogger” aesthetic and I can stop cringing about it.

Fucking goofy ahh KKK shit.

You can say ass here

Yes but I like the “ahh”, it makes me imagine people doing a random little moan in the middle of the sentence.

I feel like the Internet Archive is a prime target for techfashy groups. Both for the amount of culture you can destroy, and because backed up webpages often make people with an ego the size of the sun look stupid.

Also, I can’t remember but didn’t Yudkowsky or someone else pretty plainly admit to taking a bunch of money during the FTX scandal? I swear he let slip that the funds were mostly dried up. I don’t think it was ever deleted, but that’s the sort of thing you might want to delete and could get really angry about being backed up in the Internet Archive. I think Siskind has edited a couple articles until all the fashy points were rounded off and that could fall in a similar boat. Maybe not him specifically, but there’s content like that that people would rather not be remembered and the Internet Archive falling apart would be good news to them.

Also (again), it scares me a little that their servers are on public tours. Like it’d take one crazy person to do serious damage to it. I don’t know, but I’m hoping their >100PB of storage includes backups, even if it’s not 3-2-1. I’m only mildly paranoid about it lol.

it scares me a little that their servers are on public tours

frankly, the entire design of IA is more than a bit fucking stupid for the purpose it serves. “oh hey here’s the whole IA, right in this building over here” is just galaxybrained derpery

for physical goods I can understand a central point (or some centralisation) in archive management, but ffs we’re multiple decades into knowing how to build things differently

(stance contextualisation: while I’m glad that the IA exists, I’m not an unreserved stan of it. there are a couple other notable concerns with it, alongside the thing I just mentioned)

@BlueMonday1984 whoever this dipshit is needs to fucking stop

Someone shared this website with me at work and now I am sharing the horror with you all: https://www.syntheticusers.com/

Reduce your time-to-insight

I do not think that word means what they think it means.

Emily Bender devoted a whole episode of Mystery AI Hype Theater 3000 to this.

I have nothing to add, save the screaming.

Synthetic Users uses the power of LLMs to generate users that have very high Synthetic Organic Parity. We start by generating a personality profile for each user, very much like a reptilian brain around which we reconstruct its personality. It’s a reconstruction because we are relying on the billions of parameters that LLMs have at their disposal.

They could’ve worded this so many other ways

But I suppose creepiness is a selling point these days

they put bullshit inside my bullshit industry for generating bullshit??? the investors are delighted!

the only positive is that their carousel pitch is at least honest about the desire

and that list of companies who’ve supposedly used it is telling, I guess

the rest of this….oh dear god

Online art school Schoolism publicly sneers at AI art, gets standing ovation

And now, a quick sidenote:

This is gut instinct, but I’m starting to get the feeling this AI bubble’s gonna destroy the concept of artificial intelligence as we know it.

Mainly because of the slop-nami and the AI industry’s repeated failures to solve hallucinations - both of those, I feel, have built an image of AI as inherently incapable of humanlike intelligence/creativity (let alone Superintelligence™), no matter how many server farms you build or oceans of water you boil.

Additionally, I suspect that working on/with AI, or supporting it in any capacity, is becoming increasingly viewed as a major red flag - a “tech asshole signifier” to quote Baldur Bjarnason for the bajillionth time.

For a specific example, the major controversy that swirled around “Scooby Doo, Where Are You? In… SPRINGTRAPPED!” over its use of AI voices would be my pick.

Eagan Tilghman, the man behind the ~~slaughter~~animation, may have been a random indie animator, who made Springtrapped on a shoestring budget and with zero intention of making even a cent off it, but all those mitigating circumstances didn’t save the poor bastard from getting raked over the coals anyway. If that isn’t a bad sign for the future of AI as a concept, I don’t know what is.

I think a couple of people noted it at the start, but this is truly a paradigm shift.

We’ve had so many science fiction stories, works, derivatives, musing about AI in so many ways, what if it were malevolent, what if it rebelled, what if it took all jobs… But I don’t think our collective consciousness was aware of the “what if it was just utterly stupid and incompetent” possibility.

I don’t think our collective consciousness was aware of the “what if it was just utterly stupid and incompetent” possibility.

It’s a possibility which doesn’t make for good sci-fi (unless you’re writing an outright dystopia (e.g. Paranoia)), so sci-fi writers were unlikely to touch it.

The tech industry had enjoyed a lengthy period of unvarnished success and conformist press up to this point, so Joe Public probably wasn’t gonna entertain the idea that this shiny new tech could drop the ball until they saw something like the glue pizza sprawl.

And the tech press isn’t gonna push back against AI, for obvious reasons.

So, I’m not shocked this revelation completely blindsided the public.

I think a couple of people noted it at the start, but this is truly a paradigm shift.

Yeah, this is very much a paradigm shift - I don’t know how wide-ranging the consequences will be, but I expect we’re in for one hell of a ride.

Paranoia is the only one I can think of that’s actually pretty well on the money because the dystopian elements come from the fact that the wildly incompetent friend computer has been given total power despite everyone on some level knowing that fact, even if they can’t admit it (anymore) without being terminated. The secret societies all think they can work the situation to their advantage and it provides a convenient scapegoat for terrible things they probably want to do anyways.

Alan Moore wrote a comic book story about AI about 10 years ago that parodied rationalist ideas about AI and it still holds up pretty well. Sadly the whole thing isn’t behind that link - I saw it on Twitter and can’t find it now.

Not a sneer, but I saw an article that was basically an extremely goddamn long list of forum recommendations and it gave me a warm and fuzzy feeling inside.

That’s awesome. Lemmy is great, but old-school forums are just something else.

For a burst of nostalgia for at least some of you nerds (lovingly), let me add forums.spacebattles.com to the list

If you mention SpaceBattles we also need to add Sufficient Velocity for completeness’s sake.

There’s another one that focuses mostly on erotic fiction but since that’s not really my bag I’ve forgotten what it’s called. And I think it’s not as big as SB and SV anyway since that user base is mostly on AO3 these days.

Nobody likes Bryan Johnson’s breakfast at the Network School

A cafe run by immortality-obsessed multi-millionaire Bryan Johnson is reportedly struggling to attract customers with students at the crypto-funded Network School in Singapore preferring the hotel’s breakfast buffet over “bunny food.”

I did not expect to be tricked into reading about the nighttime erections of the man with the most severe midlife crisis in the world.

he has 80% fewer gray hairs, representing a “31-year age reversal”

According to Wikipedia this guy is 47. Sorry about your hair as a teenager I guess? I hope the early graying didn’t lead to any long term self-esteem issues.

Alternatively, he only had 5 gray hairs to begin with I guess? I’m more concerned about the fact that he’s apparently taking time to set a timer whenever he gets hard at night. I don’t want to yuck anyone’s yum, but I’m pretty sure you’re doing it wrong if you’re taking time out of the experience to collect those metrics.

If he collects enough metrics, he could make a horrendously cursed blogpost out of it like Aella

The Network School offers Johnson’s healthy food and a fitness program called the Blueprint Protocol. He claims that after three years of following his blueprint the duration of his night-time erections totals 179 minutes, “better than the average 18-year-old”

Yeah, this is a very normal diet that’s advertising itself in very normal ways.

I’m trying to imagine the kind of wacky gross VR body tracking setup you’d need to measure that metric while asleep and all I’m coming up with is mutilated Powerglove

that’s because you’re discounting quantified dating, and the scorecard he gives partners to fill out

what is the utility of 179 minutes of night-time erections

as a warning to others

my first thought reading this was that you meant it could be a deterrent to burglars. then I imagined a pair of increasingly nervous burglars timing his erections and freaking out as it hit 179 minutes. “we gotta bail man, that’s longer than the average 18 year old”

When I was adjusting to a high fiber diet for medical reasons I couldn’t figure out why I was so incredibly hungry despite eating enough.

Then I realized “huh, I haven’t had any fat at all for the past week” and went and made myself four slices of buttery toast and they were so tasty.

I want a menu!

What do you think is the venn diagram of “people who go to The Network School” and “men who believe in the meat-only diet”? I imagine there’s a lot of crossover

Now if it was Brian Johnson from AC/DC, I bet it’d be awesome.

Any mild pushback to the claims of LLM companies sure brings out the promptfondlers on lobste.rs

https://lobste.rs/s/qcppwf/llms_don_t_do_formal_reasoning_is_huge

Plenty of agreement, but also a lot of “what is reasoning, really” and “humans are dumb too, so it’s not so surprising GenAIs are too!”. This is sure a solid foundation for multi-billion startups, yes sirree.

it’s kind of comforting that the current attitude towards generative AI in some tech spaces is “of course it can’t do cognition and it isn’t really good for anything, who said it was” which is of course rich from the exact same posters who were breathlessly advertising for the tech as revolutionary both online and at work as recently as a couple of weeks ago (and a lot of them still hedge it with “but it might be useful in the near future”). the comfort is it feels like that attitude comes from deep embarrassment, like how the orange site started claiming it is and always was skeptical of crypto once the technology got irrevocably associated with scams and gambling and a lot of the easy money left

yeah, there’s a stench of desperation from the defenders

of course, as with crypto, there are uses (in the case of crypto, nothing legitimate). And it’s going to be a fallback for fondlers to point them out (for example, I believe that auto-generated audiobooks are viable, if they’re generated from actual books)

I was watching a h0ffman stream the other day when someone happened to bring up autoplag in some context. didn’t see the asking context, but h0ffman’s answer warmed my heart. paraphrased: “what would you want to use that for? you wouldn’t steal a mod, why would you want to use a prompt? that stole from artists. fuck that shit.”

(h0ffman’s one of the names in the demoscene, often plays sets at compos, does some of his own demos, etc)

these chuds lack self awareness and they never realise that by moving the goalposts on brain stuff they are admitting their own idiocy.

Many thanks to @blakestacey and @YourNetworkIsHaunted for your guidance with the NSF grant situation. I’ve sent an analysis of the two weird reviews to our project manager and we have a list of personnel to escalate with if we can’t get any traction at that level. Fingers crossed that we can be the pebble that gets an avalanche rolling. I’d really rather not become a character in this story (it’s much more fun to hurl rotten fruit with the rest of the groundlings), but what else can we do when the bullshit comes and finds us in real life, eh?

It WAS fun to reference Emily Bender and On Bullshit in the references of a serious work document, though.

Edit: So…the email server says that all the messages are bouncing back. DKIM failure?

Edit2: Yep, you’re right, our company email provider coincidentally fell over. When it rains, it pours (lol).

Edit3: PM got back and said that he’s passed it along for internal review.

I’d really rather not become a character in this story

Good luck. In my experience you can’t speak up about stuff like this without putting yourself out there to some degree. Stay strong.

Regarding the email bounceback, could you perhaps try sending an email from another address (with a different host) to the same destination to confirm it’s not just your “sending” server?

The bounceback should have info in it on the cause, and DKIM issues should result in a complaint response from the denying recipient server.
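If you'd rather not eyeball the raw bounce, here's a minimal sketch of pulling the failure reason out of it with Python's standard `email` package. It assumes the bounce is a standard delivery status notification (a `multipart/report` with a `message/delivery-status` part, per RFC 3464); plenty of servers send free-form bounces instead, in which case this returns nothing. The function name `bounce_reasons` is made up for illustration.

```python
from email import message_from_string


def bounce_reasons(raw_message: str) -> list[dict[str, str]]:
    """Collect per-recipient failure details from a DSN-style bounce.

    Assumption: the bounce follows RFC 3464, so the details live in a
    message/delivery-status part. Free-form bounces yield an empty list.
    """
    msg = message_from_string(raw_message)
    reasons = []
    for part in msg.walk():
        if part.get_content_type() != "message/delivery-status":
            continue
        # The stdlib parser exposes this part's payload as a list of
        # header-only Message objects, one per block of status fields.
        for block in part.get_payload():
            if "Status" not in block and "Diagnostic-Code" not in block:
                continue  # per-message block (e.g. Reporting-MTA), not a failure
            reasons.append({
                "recipient": block.get("Final-Recipient", ""),
                "action": block.get("Action", ""),
                "status": block.get("Status", ""),
                "diagnostic": block.get("Diagnostic-Code", ""),
            })
    return reasons
```

Feed it the full source of the bounce (headers included). A DKIM or policy rejection would typically show up in the `diagnostic` text, though the exact wording is entirely up to the rejecting server.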

from this article

Amazon asked Chun to dismiss the case in December, saying the FTC had raised no evidence of harm to consumers.

ah yes, the company that’s massively monopolized nearly all markets, destroyed choice, constantly ships bad products (whose existence is incentivised by programs of its own devising), and that has directly invested in enhanced price exploitation technologies? that one? yeah, totes no harm to consumers there

Just something I found in the wild (r/MachineLearning): Please point me in the right direction for further exploring my line of thinking in AI alignment

I’m not a researcher or working in AI or anything, but …

you don’t say

Alignment? Well, of course it depends on your organization’s style guide but if you’re using TensorFlow or PyTorch in Python, I recommend following PEP-8, which specifies four spaces per indent level and…

Wait, you’re not working in AI? Then what are you even asking for?

Good sign of a crank is wanting to solve the biggest problem in the field (or more like several fields) with this one easy trick. Amazing that others are now following Yud’s lead.

I think in this case the crankery is wanting to solve a problem that doesn’t even exist, i.e. “how to make sure the machine god is not evil”