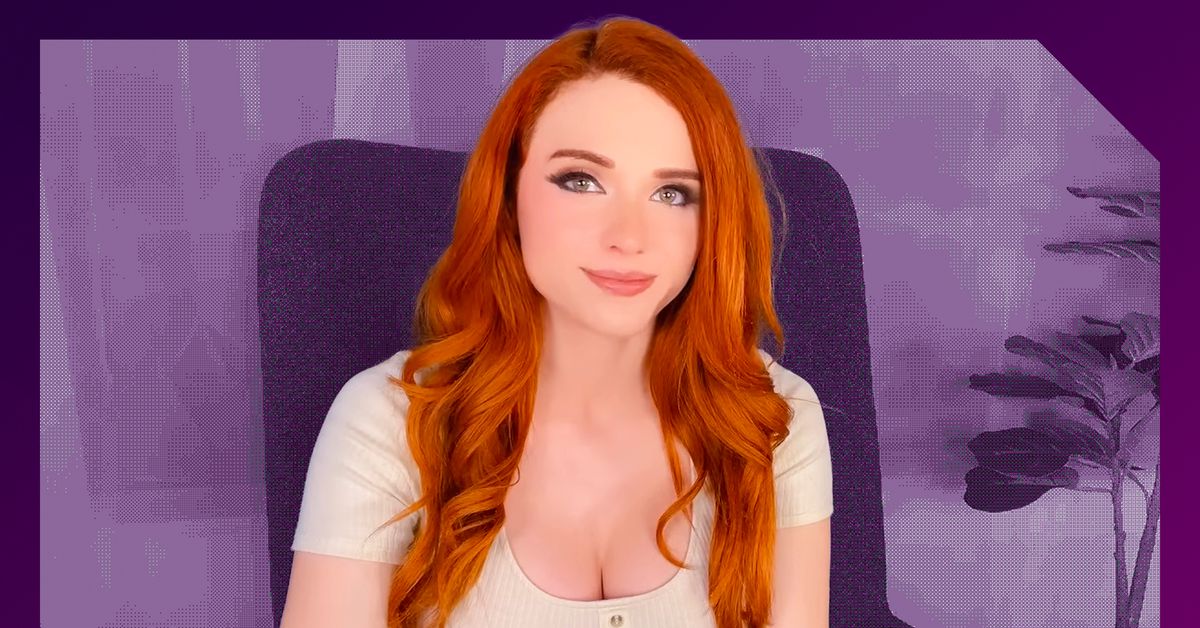

there is… a lot going on here, and it's part of a broader trend that's probably not for the better and speaks to some deeper-seated issues we currently have in society. here's a choice moment from the article about another influencer who did something similar earlier this year, and how that went:

> Siragusa isn’t the first influencer to create a voice-prompted AI chatbot using her likeness. The first would be Caryn Marjorie, a 23-year-old Snapchat creator, who has more than 1.8 million followers. CarynAI is trained on a combination of OpenAI’s ChatGPT-4 and some 2,000 hours of her now-deleted YouTube content, according to Fortune. On May 11, when CarynAI launched, Marjorie tweeted that the app would “cure loneliness.” She’d also told Fortune that the AI chatbot was not meant to make sexual advances. But on the day of its release, users commented on the AI’s tendency to bring up sexually explicit content. “The AI was not programmed to do this and has seemed to go rogue,” Marjorie told Insider, adding that her team was “working around the clock to prevent this from happening again.”

This strikes me as very exploitative. Capitalizing on loneliness to enrich yourself gives me bad vibes (especially when the users of this thing will actually be worse off mentally in the end, as @cnnrduncan mentioned).

I have no doubt that for some users, it'll turn into a cycle: they'll feel lonelier each time they use it, which pushes them to use it more, and so on. Amouranth does nothing to protect these vulnerable users. I had the same feelings about Replika years before ChatGPT became a thing.