The rule doesn’t say posts have to be images, so have this thing I wrote in a text file a couple of years ago and never got around to redrafting.

A: When it comes to downloading the entire internet, there are problems.

For the sake of argument, let’s define “the internet” as “the surface web”. Y’know, what our parents think of as “the internet”. It turns out we’d face some extra problems if we defined it as “everything stored on every computer currently connected to the internet” (namely, how to find it all), so let’s just go with the surface web: it’s pretty intuitive, and it sounds doable. Right?

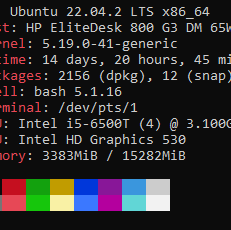

The first problem is disk space. To do a right-click “Save as” on the whole internet, you’re gonna need somewhere to store it. You can use ZFS to squash it all down, remove redundant data, that sort of thing, but ultimately you’re going to need a lot of disk space. My first computer had no hard drive. My second computer had a 720MB hard drive. My current computer has about 20TB, which is a lot. But on the grand scale of things, that’s not even enough to download all of Google, and Google is just one website. [citation needed]
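For the curious, “remove redundant data” is called deduplication. The sketch below is a toy illustration of the general idea under my own assumptions (fixed 4 KiB blocks, SHA-256 as the block key, a plain dict as the “disk”). ZFS does something broadly similar by keying blocks on their checksums, but this is not its implementation, and `dedup_store`/`rebuild` are made-up names.

```python
import hashlib

BLOCK_SIZE = 4096  # hypothetical fixed block size, chosen for this sketch only

def dedup_store(data: bytes, block_size: int = BLOCK_SIZE):
    """Split data into fixed-size blocks and keep each distinct block once.

    Returns (store, recipe): store maps a SHA-256 digest to the block bytes,
    and recipe is the ordered list of digests needed to rebuild the data.
    """
    store = {}
    recipe = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)  # identical blocks are stored only once
        recipe.append(digest)
    return store, recipe

def rebuild(store, recipe) -> bytes:
    """Reassemble the original bytes from the recipe of block digests."""
    return b"".join(store[d] for d in recipe)

# Ten copies of the same 4 KiB "page" plus one different page.
page = b"A" * BLOCK_SIZE
other = b"B" * BLOCK_SIZE
data = page * 10 + other
store, recipe = dedup_store(data)
print(len(data), "bytes in,", sum(len(b) for b in store.values()), "bytes stored")
# -> 45056 bytes in, 8192 bytes stored
```

This only helps when the internet repeats itself byte-for-byte, which it does less than you’d hope, hence still needing a lot of disk.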

Let’s say, just so we can get past this problem, that you don’t care about storing it. You just want to download it for the sake of downloading it, and you’ll be satisfied if all the ones and zeros come down the wire at some point. Suddenly the first problem goes away and this ultimately-pointless task becomes even more pointless because, at the end of it, you won’t possess the entire internet.

The second problem is Cloudflare. Cloudflare is a big problem, and it will hinder your downloading ambitions. It turns out they “protect” [citation needed] about 20% of all websites, and try to prevent users from doing things like automated browsing, DDoS attacks, using screen readers, or downloading the entire internet. You would need to solve Cloudflare, and that’s very difficult: after all, if it were easy, Cloudflare would be circumvented on a regular basis, which would mean Cloudflare is little more than a protection racket with bad PR. And I would never, ever, ever accuse Cloudflare of being a protection racket, because I don’t want to wake up with a server’s head in my bed. There are also smaller competitors to Cloudflare, some of which will pose a real challenge too.

But let’s assume you’ve circumvented Cloudflare, along with its smaller competitors. Now it’s finally possible to start the download. There isn’t a button you can click that says “download the internet”, so you’ll need to install a specialised tool. HTTrack is one such tool. It’s what’s called a “web crawler”: it’ll visit a website, intelligently follow links, and store everything it sees in a form you can browse on your computer. You’ll need to stop it doing that last bit, because you don’t care about storing anything; you just want it downloaded. You also need to tell it what, specifically, you want it to download - “the whole internet” isn’t a default option, for some bizarre reason - but as you’ve got this far, a complete list of currently registered domain names should be trivial for you to obtain by comparison. Feed that list into HTTrack, sit back, and watch the bits flow.
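What “intelligently follow links” boils down to is roughly a breadth-first walk over pages. This is not how HTTrack is actually written; it’s a minimal sketch over a made-up, in-memory link graph (`LINKS` and the `*.example` pages are hypothetical), where the seed list plays the role of that list of domain names.

```python
from collections import deque

# Hypothetical link graph standing in for the web: page -> pages it links to.
LINKS = {
    "a.example/": ["a.example/about", "b.example/"],
    "a.example/about": ["a.example/"],
    "b.example/": ["b.example/news", "c.example/"],
    "b.example/news": [],
    "c.example/": ["a.example/"],
}

def crawl(seeds, links):
    """Breadth-first crawl: visit each page once and queue every link it contains."""
    visited = set()
    order = []
    frontier = deque(seeds)
    while frontier:
        url = frontier.popleft()
        if url in visited:
            continue  # already fetched via another link
        visited.add(url)
        order.append(url)  # a real crawler would download the page here
        for link in links.get(url, []):
            if link not in visited:
                frontier.append(link)
    return order

print(crawl(["a.example/"], LINKS))
# -> ['a.example/', 'a.example/about', 'b.example/', 'b.example/news', 'c.example/']
```

The `visited` set is what stops the crawler going round in circles, since pages happily link back to each other.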

The first thing you’ll notice is that you’re going to be watching it for quite a while. Even if you have a 10 gigabit connection, your peak transfer rate will be 1.25GB per second. In optimal circumstances, a terabyte would take you about 13 minutes, so you’d be looking at a theoretical maximum of roughly 108TB per day. But even leaving aside things like delays in getting responses, you can bet a lot of websites won’t let you download at anywhere near that speed.
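Bandwidth arithmetic is easy to get wrong by a factor of ten, so here it is spelled out. This assumes decimal units (1 TB = 10^12 bytes, 1 Gbit/s = 10^9 bits per second) and a perfectly saturated link with no latency, protocol overhead or rate limiting. A 1 Gbit/s row is included for comparison.

```python
TB = 10**12             # decimal terabyte, in bytes
GBIT = 10**9            # one gigabit per second, in bits per second
SECONDS_PER_DAY = 86_400

def transfer_time_seconds(size_bytes: float, link_bits_per_second: float) -> float:
    """Best-case transfer time: no latency, no protocol overhead, no rate limits."""
    return size_bytes * 8 / link_bits_per_second

def tb_per_day(link_bits_per_second: float) -> float:
    """Best-case terabytes moved in 24 hours at full line rate."""
    return link_bits_per_second / 8 * SECONDS_PER_DAY / TB

for gbit in (1, 10):
    rate = gbit * GBIT
    minutes = transfer_time_seconds(TB, rate) / 60
    print(f"{gbit:>2} Gbit/s: 1 TB in {minutes:.1f} min, {tb_per_day(rate):.1f} TB/day")
# ->  1 Gbit/s: 1 TB in 133.3 min, 10.8 TB/day
# -> 10 Gbit/s: 1 TB in 13.3 min, 108.0 TB/day
```

Real-world throughput will be a lot lower than either row, for the reasons above.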

In fact, it turns out we’ve finally reached a problem that we can’t solve, or even handwave away - the march of time. The internet is constantly changing, with information being added and deleted at breakneck speed. The English Wikipedia alone receives roughly 2 edits per second. So, every time you’re close to finished, you’ll find you still have more to do, and because HTTrack is dutifully following every link it encounters, the task of downloading the internet is one that it will never be able to complete.
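Why “close to finished” never becomes “finished” can be seen in a toy queue model. All the numbers here are made up: each tick the crawler fetches up to `crawl_rate` pages, and meanwhile the web adds `change_rate` new or edited pages that need fetching again. `simulate_backlog` is a hypothetical helper, not anything HTTrack does.

```python
def simulate_backlog(initial_pages, crawl_rate, change_rate, ticks):
    """Toy model: each tick the crawler fetches up to crawl_rate pages,
    then change_rate pages are added or edited and must be fetched (again)."""
    backlog = initial_pages
    history = []
    for _ in range(ticks):
        backlog -= min(backlog, crawl_rate)  # pages fetched this tick
        backlog += change_rate               # pages that changed in the meantime
        history.append(backlog)
    return history

# Hypothetical numbers: the crawler is 50x faster than the web changes.
history = simulate_backlog(initial_pages=1_000, crawl_rate=100, change_rate=2, ticks=20)
print(history[-1], min(history))
# -> 2 2
```

Even with a crawler far faster than the rate of change, the backlog bottoms out above zero; as long as anything on the web is still changing, there is always more to do.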

Therefore, it is not possible to download the entire internet. Sorry.

Just hit Ctrl+S on every page, why all this?

Was this written by GPT-3.5? So many things are wrong here.

I don’t see how it could’ve been. GPT-3.5 wasn’t around a couple of years ago.

If you download the internet to the cloud, then you have double the internet. Repeat several times and you can crash the Matrix.

You can download the entirety of Wikipedia without pictures for less than 2 TB of storage.

Just download it onto floppy disks, dummy.

Moldy